Time to check in on the big AI Art conflict. Back in August I wrote an article here telling everyone not to panic over AI Art. I had been very concerned after talking to multiple artists close to me who had despaired to the point of thinking about self-harm over the brewing, and very nasty, debate surrounding the issue. I still stand by that article. I don’t think artists should be despairing, panicking, or giving up art over this tech, as I still believe it will simmer down into a legal and manageable form that will ultimately be another tool in the toolbox of many artists.

But even if we don’t panic, a level of concern and pushback IS warranted. I have been very pleased to see strong lawsuits coming from artists in our community and from giant corporations like Getty Images (not just once, but twice!). This tech can be used ethically, but these first platforms are getting in fast and trying to get away with whatever they can while the law catches up. I applaud all the work that artists like Karla Ortiz, Kelly McKernan, and Sarah Andersen are doing to hold the line against the copyright infringement inherent in these platforms. It has also been very gratifying to see professional art organizations and my Art Director and Creative Director colleagues in publishing and many other industries push back against using these platforms (no, not all of them, but many, and more every day). A big round of applause also goes to the Concept Art Association for raising over $200k to fight these platforms politically.

Meanwhile, I think it’s important to realize that there’s as big a backlash against these infringements from within the Tech world as there is from the Art world. Without blowing anyone’s professional cover, I will say that I am lucky to have a very close friend whose degree and profession are in machine learning, and who has been keeping me up to date on the equivalent level of horror in her world at the unethical scraping these companies are doing. (Remember, “AI” sounds catchier, but that’s one of the language tricks used to muddy the issue. These are not Artificial Intelligences by anyone’s actual definition.) Folks who study in this realm of technology are required to take ethics classes specifically to learn how to gather data responsibly and use it ethically. And a lot of the fancy big words and denials these founders keep offering in their interviews don’t hold water with anyone who works with this technology. They say they couldn’t have scraped their data in a way that left out copyrighted material, but that is easily disproven by the fact that all the AI music generators know quite well how to avoid copyrighted material. These platforms were much more scared of Big Record Label Lawyers than of anyone defending artists and imagery. It will be shown how easy it is to train these algorithms ethically once they are legally forced to do so. And there is a very large movement on the ethical side of the tech world that is already working on ways to do it, and to hamper the misuse of this technology.

Last week, in case you missed it, there was a great article in the New York Times about a project at the University of Chicago to protect artists’ images online.

“Artists are afraid of posting new art,” the computer science professor Ben Zhao said. Putting art online is how many artists advertise their services but now they have a “fear of feeding this monster that becomes more and more like them,” Professor Zhao said. “It shuts down their business model.”

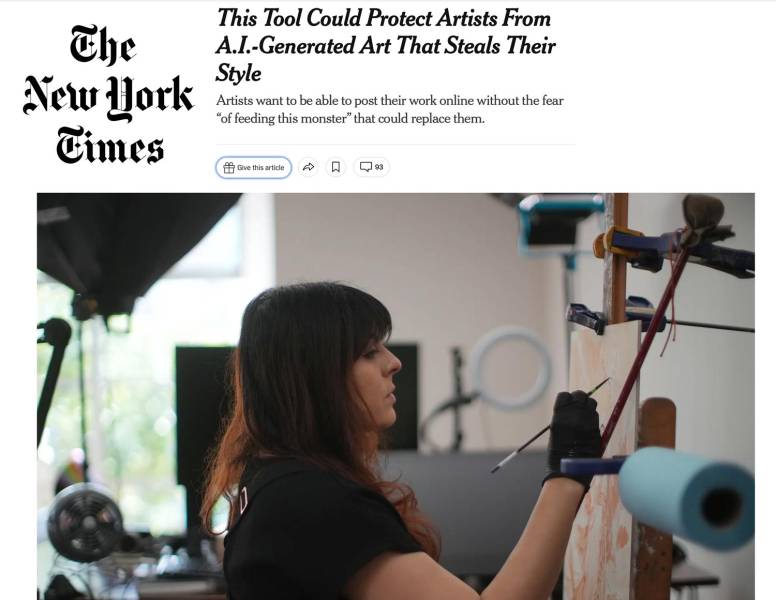

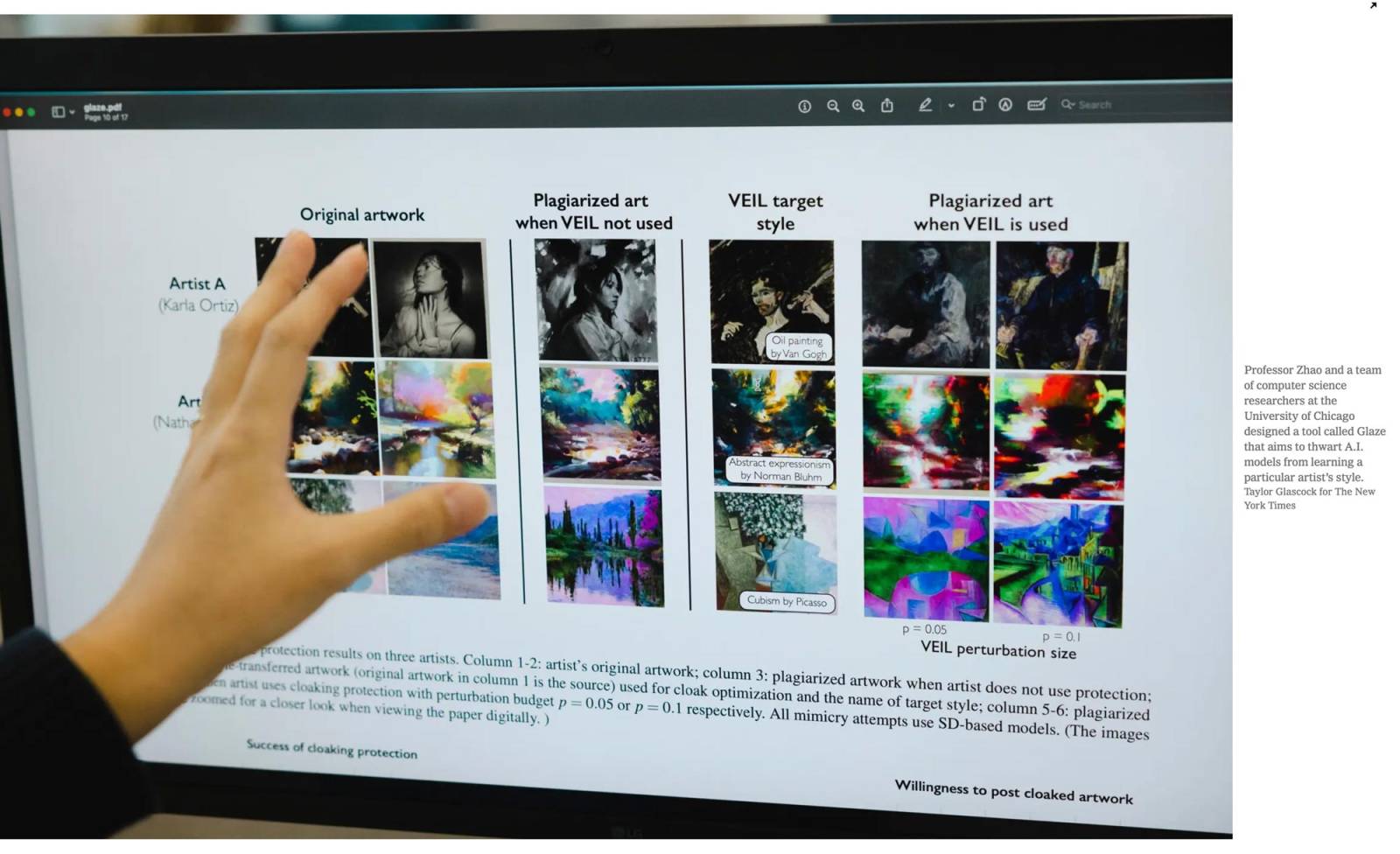

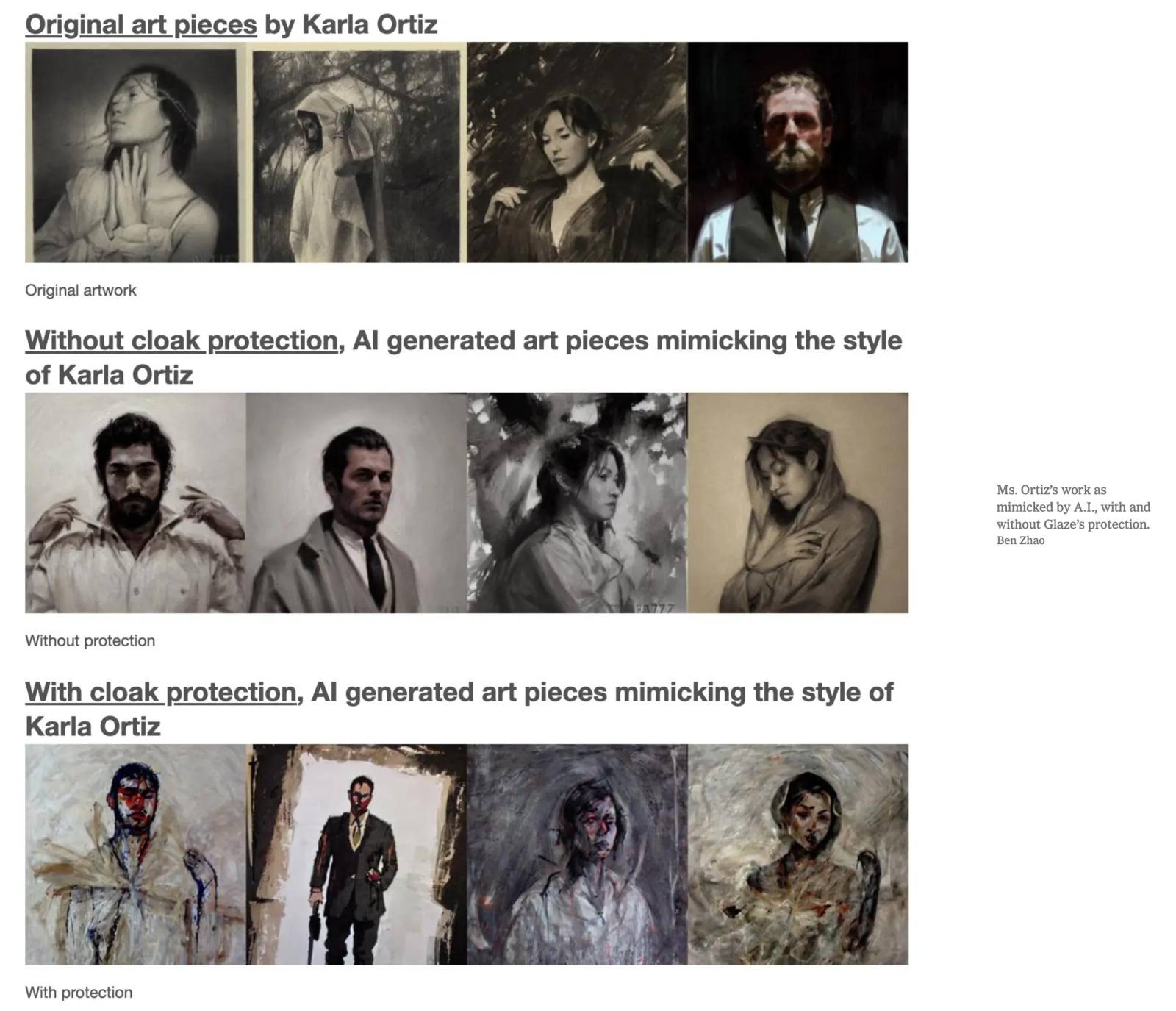

That led Professor Zhao and a team of computer science researchers at the University of Chicago to design a tool called Glaze that aims to thwart A.I. models from learning a particular artist’s style. To design the tool, which they plan to make available for download, the researchers surveyed more than 1,100 artists and worked closely with Karla Ortiz, an illustrator and artist based in San Francisco.

(here’s a gift link to the NYTimes article that should get you past any paywalls)

Glaze works by making changes to an image that are nearly invisible to the human eye but that confuse the AI models trained on scraped images, so they can’t pick up that artist’s style.

Of course, Glaze puts the burden of protection on the artists, and it doesn’t help defend old images that have already been scraped; the article addresses both points. But this is just the beginning of the Ethical Tech response. Expect to see more robust protections and blockers rolling out over the coming months. Already in the works are sturdier ways of embedding unwipeable metadata and invisible watermarks into images. I wouldn’t be surprised to see these functions quickly become baked into our usual exporting workflows in programs like Photoshop and Procreate.

I will say, it was gratifying to hear that many users were complaining about losing the ability to copy Greg Rutkowski’s style:

“One service already seems to have done something along these lines. When Stability AI released a new version of Stable Diffusion in November, it had a notable change: the prompt ‘Greg Rutkowski’ no longer worked to get images in his style, a development noted by the company’s chief executive Emad Mostaque.

Stable Diffusion fans were disappointed. ‘What did you do to greg,’ one wrote on an official Discord forum frequented by Mr. Mostaque.”

It just goes to prove the point: it’s not the algorithm that matters, it’s what it’s trained on.

So carry on, don’t despair, and keep making your art.

All images from the NYTimes article

The novelty seems to be wearing off already for non-artists, and there’s a lot of grumbling about AI trash filling up image sites.

The best way to make your art style AI-proof is to draw characters who are actually doing something instead of just standing there posing, or to have multiple characters interacting with each other. The AI can’t handle that at all.

Thanks for the update on AI. Has anyone coined a term for AI image generators that more accurately describes what they are, and also highlights the fact that they need to be fed loads of work by living artists in order to make anything that looks like a contemporary illustration?

Thank you so much for this article; reading it was such a much-needed relief that it brought me to tears. I’m an artist who has considered self-harm in the wake of the rise of image generators. I have a history of suicide attempts and needing crisis care, but I’d been doing better in the last few years until this. It’s been extremely painful to weather such an unprovoked assault on who and what I am. This was the first time I really felt a bit of hope.

I am glad to see there is effective pushback against the “AI” image theft going on. While the images I’ve seen range from lovely to horrifying, they are not ‘art’ the way photos can be. Then again, there was a lot of screaming that ‘a photo is just a mechanically generated image’ when that technology got going, too. Is it cheating to use photos as reference for illustrations? No, it isn’t. Eventually those weird images *might* work as a springboard for ideas.

I’ve seen an illustrator I really like use one of the engines to generate images that she then drew over and used as the basis for a series of ‘comic’ pages. Since she was using the images as a basis for her own work and modifying them (especially with the text balloons), it doesn’t bother me too much, especially since her posts loudly stated “I started with AI images, then modified them.” She also tried to use one of the engines to generate images of a cartoon character she won a Hugo for. That should have been simple enough, yet every attempt was an Epic Fail that didn’t even get close. Of course, the more determined scumbags will keep trying.

Mind, I think of entirely too much “Fine Art ™” as merely clever marketing, and I have seen presentations that prove my cynicism about ‘freaky weirdness pretending to be art’ is justified. Dammit. Not from the artists here on Muddy Colors, of course. Yet the so-called Fine Artists ™ who have figured out how to convince people to spend silly amounts of money on tangled string arranged on packing crates, sold to them as Art, have the gall to complain about “mere illustrators not being artists.” I wish I were making that up, too, about the string and packing crates.

I’m a computer scientist as well as an artist, and I agree with a lot of the points you make. Thanks for sharing this! People need to know they don’t need to freak out about it. Good composition, faces, and hands are things AIs struggle with.

https://www.pcgamer.com/pathfinder-maker-bans-ai-generated-art-text-in-its-games/